Using deep learning to classify objects

We built a mobile app to help you identify the objects you're looking at.

Among the millions of images uploaded online every day, only a few are processed. Humans cannot label such a large amount of data efficiently. As a group of 5 students, we decided to automate this process by extracting information from images with machine learning.

We built SmartSight, a system that provides information about any image. It exposes an API that calls a classification engine, and we demonstrate it with an Android application.

Check the organization on GitHub.

Conception

Global architecture

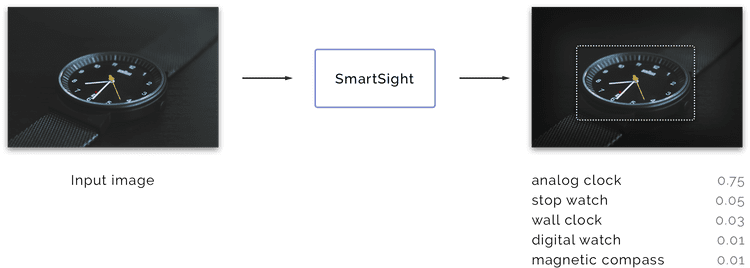

The basic idea of our solution is fairly simple:

- We send an image as input to our system

- Our system processes and classifies this image

- The system outputs a set of predicted classes

The SmartSight box, representing the engine, is a black box that is only aware of its inputs and returns a set of titles, each associated with a score. The titles are the classes that the engine inferred. The scores are the engine’s confidence in each result.
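The step from raw scores to a short list of titles can be sketched as follows. This is only an illustration, not the engine's actual code: the function name `topPredictions` and the default of five results are assumptions, the latter chosen because the sample responses in this article contain five entries.

```javascript
// Illustrative sketch: pairs each label with its score and keeps the k best.
// `topPredictions` and the default k = 5 are assumptions, not SmartSight code.
function topPredictions (labels, scores, k = 5) {
  if (labels.length !== scores.length) {
    throw new Error('labels and scores must have the same length')
  }

  return labels
    .map((label, i) => ({ class: label, score: scores[i] }))
    // Highest confidence first
    .sort((a, b) => b.score - a.score)
    .slice(0, k)
}
```

Calling `topPredictions(['trifle', 'pizza'], [0.1, 0.7], 1)` keeps only the `pizza` entry, since it has the highest score.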

Detailed architecture

We had a clear idea from the start of how the different services would work together to form a complete system. We did, however, weigh two different solutions:

- Embed the whole system into the mobile device

- Design a micro-service system communicating with the mobile device

Solution (1) could be seen as the easiest to implement. We would only need to build the machine learning engine into the mobile application, which would work without any internet connection and would in theory give the fastest result. The downside is that computing power varies a lot from one mobile device to another, and the type of algorithm used for classification is computationally expensive and therefore drains the battery. Another drawback is that all the data needed to classify an image would have to be stored on the device, which is unfair to the user.

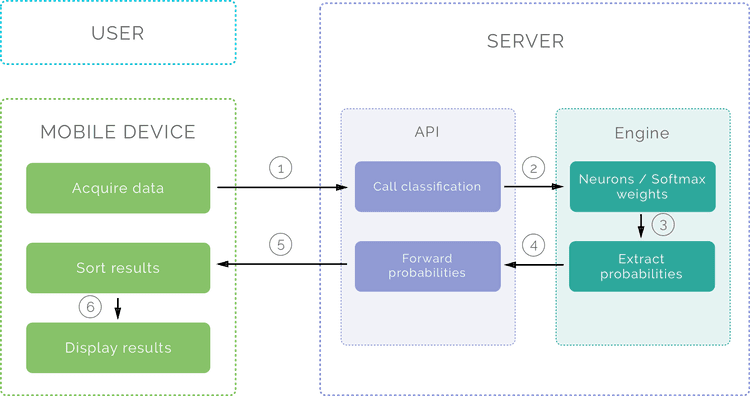

We opted for solution (2) which is a modular architecture containing an API, an engine and the mobile application.

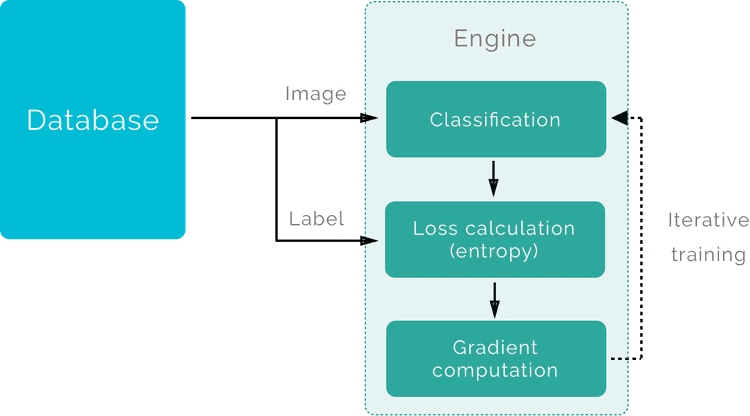

Two phases are to be distinguished: the training phase and the utilization phase. During the training phase, the engine searches for the best parameters (the weights) using a training algorithm (backpropagation). The utilization phase starts once training is complete.

Once trained, the flow of this architecture is the following:

- The mobile device sends the image to the server’s API

- The server receives the image and runs the engine with the image as input

- The engine processes the image and sends the results back to the parent process

- The server sends the response to the mobile

This solution takes advantage of the server’s computing power to execute the classification script that we called the engine. The time lost sending the image from the device to the server is thus offset by the more powerful engine. The downside of this solution is that it needs an internet connection to work.
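The server-side part of this flow, where the server runs the engine as a child process and reads its results back, could look roughly like the sketch below. It assumes the engine prints its predictions as JSON on standard output; the function name `runEngine` and its `command` and `args` parameters are placeholders, since the engine's real entry point is not described here.

```javascript
const { execFile } = require('child_process')

// Illustrative sketch: runs a classifier command on an image and resolves
// with the JSON it prints on stdout. `command` and `args` are placeholders
// for the engine's real entry point, which this article does not specify.
function runEngine (command, args, imagePath) {
  return new Promise((resolve, reject) => {
    execFile(command, [...args, imagePath], (err, stdout) => {
      if (err) {
        reject(err)
        return
      }

      try {
        resolve(JSON.parse(stdout))
      } catch (parseError) {
        // The engine exited normally but did not print valid JSON
        reject(parseError)
      }
    })
  })
}
```

Returning a promise lets the server keep handling other requests while the engine is working.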

Execution

The SmartSight project has been fully open source from the start. All the assets and the code are available in the GitHub organization. This setup is efficient because it lets the team develop each module in its own GitHub repository.

SmartSight API

The SmartSight API is the server of the system. It is the interface that links the Android application and the core engine. The API was hosted locally during development and on its own server in production.

This service is versioned and offers a single route, POST /v1/classify, which expects an image as input and returns predictions about it (see full documentation). Versioning an API is good practice because it avoids introducing breaking changes: if we drastically changed the output of the route, we would create a second version of the API so that client applications using version 1 of the classify route would keep working.

```json
{
  "meta": {
    "type": "success",
    "code": 200
  },
  "data": [
    {
      "score": 0.884148,
      "class": "pizza, pizza pie"
    },
    {
      "score": 0.002444,
      "class": "butcher shop, meat market"
    },
    {
      "score": 0.00208,
      "class": "carbonara"
    },
    {
      "score": 0.002078,
      "class": "trifle"
    },
    {
      "score": 0.001326,
      "class": "pomegranate"
    }
  ]
}
```

POST /v1/classify

This API has been developed using NodeJS, which is JavaScript on the server side. Koa is an abstraction of the NodeJS http module that was used to make the API more enjoyable to write. This library uses JavaScript promises and asynchronous functions to avoid using callbacks and make the code easier to reason about.
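To give an idea of what an asynchronous Koa-style handler looks like, here is a minimal sketch that wraps predictions in the `meta` and `data` envelope shown in the response. It is not the actual SmartSight handler: `createClassifyHandler`, the injected `classify` function and `ctx.state.imagePath` (where an upload middleware is assumed to have stored the file path) are all hypothetical names. Only `ctx.status` and `ctx.body` are standard Koa context properties.

```javascript
// Illustrative sketch of a Koa-style handler for POST /v1/classify.
// `classify` is an injected async function (image path -> predictions).
// `ctx.state.imagePath` is a hypothetical location for the uploaded file path.
function createClassifyHandler (classify) {
  return async (ctx) => {
    const predictions = await classify(ctx.state.imagePath)

    ctx.status = 200
    ctx.body = {
      meta: { type: 'success', code: 200 },
      data: predictions
    }
  }
}
```

Because the handler only reads and writes the context object, it can be exercised in a test with a plain object standing in for `ctx`, without starting a server.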

Since this API depends on the core Python engine, we need to reference this engine in the API. We used the very convenient Git Submodules in order to share a clean development environment.

SmartSight Android

The SmartSight Android application targets the Android API level 21, corresponding to devices running at least Android 5.0. It communicates with the SmartSight API by sending the image taken with the device’s camera and displays the results back on the screen.

The application was developed in Kotlin, a recent statically typed programming language by JetBrains that runs on the JVM. It compiles to JVM bytecode (Java and Android) and to JavaScript. We chose Kotlin to avoid the verbosity of Java. Its features make our code cleaner and easier to reason about: null safety (no more NullPointerException), type inference, functional programming support, string interpolation, unchecked exceptions, extension functions and operator overloading.

SmartSight engine

The SmartSight engine is the core of the system. It is an image recognition algorithm based on TensorFlow and trained on the ImageNet data set. It outputs its predictions as a JSON array of objects.

```json
[
  {
    "class": "pizza, pizza pie",
    "score": 0.884148
  },
  {
    "class": "butcher shop, meat market",
    "score": 0.002444
  },
  {
    "class": "carbonara",
    "score": 0.00208
  },
  {
    "class": "trifle",
    "score": 0.002078
  },
  {
    "class": "pomegranate",
    "score": 0.001326
  }
]
```

Each object of the JSON array contains two keys: class and score. The class refers to the object recognized by the algorithm as a string. The score is a confidence ratio about this prediction as a float number.
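A consumer of this output may want to check its shape before trusting it. The following sketch, with the hypothetical name `parsePredictions`, shows one way to do that in JavaScript. It is an assumption about how such a check could be written, not code taken from the SmartSight API.

```javascript
// Illustrative sketch: parses the engine output and checks that every entry
// has a string `class` and a numeric `score`. Not part of the SmartSight code.
function parsePredictions (json) {
  const predictions = JSON.parse(json)

  if (!Array.isArray(predictions)) {
    throw new TypeError('Expected a JSON array of predictions')
  }

  for (const prediction of predictions) {
    const valid = prediction !== null &&
      typeof prediction === 'object' &&
      typeof prediction.class === 'string' &&
      typeof prediction.score === 'number'

    if (!valid) {
      throw new TypeError('Each prediction needs a string class and a numeric score')
    }
  }

  return predictions
}
```

Failing early on malformed output keeps a broken engine run from reaching the mobile application as a confusing response.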

SmartSight art

Finally, we stored all the assets for the project in a SmartSight Art repository. This repo is useful to keep track of design changes over time and, above all, to use consistent logos and assets across all the SmartSight modules.

All the assets have been designed as vectors, meaning they can be enlarged and zoomed as much as needed, without pixelating.

Tests

Functional tests

Tests drove the development of the SmartSight API from start to finish, a strategy known as Test Driven Development (TDD). Since the API was developed in NodeJS, we used the futuristic test library AVA to write our tests.

These tests made sure the API returns the correct status codes and the correct format of data.

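```javascript
// `api` and `endpoint` are assumed to be defined earlier in the test file.
test.cb(`POST ${endpoint} with JPG image returns 200`, (t) => {
  api
    .post(endpoint)
    .attach('file', 'test/fixture/pizza.jpg')
    .expect(200)
    .end((err, res) => {
      if (err) {
        throw err
      }

      // Signal AVA that the callback-style test is finished
      t.end()
    })
})
```

Performance tests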

The classification core makes successful predictions on more than 95% of the images from the ImageNet database. The system is not able to label an object belonging to a class it has not been trained on.

We conducted several tests in search of an image preprocessing step that would improve the system’s performance. The features we took into account are luminosity, noise, blur and compression. Testing on a single image gives a result that cannot be exploited: the score varies a lot depending on the image’s original settings. For example, a low-luminosity picture of a cat will get a better score if the engine was trained on a low-luminosity dataset.

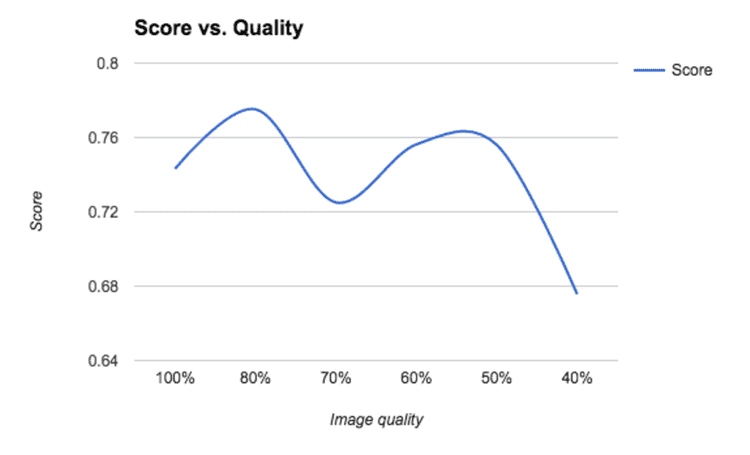

Most of the time, we observed an increase in score at around 80% image compression. A possible explanation is that the loss of precision discards details that are not meaningful. Moreover, compressing the data speeds up processing.

These tests are not sufficient to conclude anything yet; it would be more informative to run them over a large part of the database.
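Running the experiment over many images mostly comes down to averaging the scores per compression level instead of looking at one image at a time. The sketch below shows that aggregation only. The function name `meanScoreByCompression` and the shape of the measurement objects are assumptions, and the actual measurements would have to come from real engine runs.

```javascript
// Illustrative sketch: averages scores per compression level.
// Each measurement is assumed to look like { compression: 80, score: 0.75 }.
function meanScoreByCompression (measurements) {
  const sums = new Map()

  for (const { compression, score } of measurements) {
    const entry = sums.get(compression) || { total: 0, count: 0 }
    entry.total += score
    entry.count += 1
    sums.set(compression, entry)
  }

  const means = {}
  for (const [compression, { total, count }] of sums) {
    means[compression] = total / count
  }

  return means
}
```

Comparing these means across levels, rather than individual scores, would smooth out the per-image variations described above.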

Achievements

The most important aspect of our project was the development of a classification system. We created a simple, intuitive and user-friendly Android application that uses the system by communicating with a server that runs the classification engine. Our reusable API is exposed and open source so that developers can contribute to our system.

Encountered problems

Our initial goal was to create a user-focused application: the user would take a picture and, if the object was not already referenced, upload the image to add a new class to the classification system.

However, adding classes to the system requires retraining the entire classifier from scratch. This process takes time and requires substantial computing power.

We met Martin Görner, a machine learning expert, at a TensorFlow meetup in Paris. He advised us to look at transfer learning to work around this issue.

After studying this approach, we found that it doesn’t actually solve our problem: transfer learning makes it possible to specialize the system for new, specific classes by replacing the last layers of the neural network, but not to broaden the range of classes.

As of now, we are not able to broaden the range of recognized objects without significantly lowering performance.